Overview

In reinforcement learning (RL), a branch of machine learning, an agent learns to make decisions by taking actions and receiving rewards or penalties. It is an effective approach for training artificial intelligence (AI) systems, because it lets them discover good behaviors through trial and error. The method has become a mainstay of modern AI research because of its considerable success in a wide range of domains, including gaming and robotics.

Reinforcement Learning: What Is It?

Reinforcement learning is the area of machine learning that studies how agents should act in an environment to maximize a quantity called cumulative reward. Unlike supervised learning, in which a model is trained on a dataset of labeled inputs and outputs, RL learns from the consequences of actions rather than from explicit examples. This brings RL closer to the way people and animals learn: behavior is shaped by a system of rewards and penalties.

The goal of supervised learning is to use example pairs to learn a mapping from inputs to outputs. Unsupervised learning, by contrast, looks for inherent structure or hidden patterns in input data without explicit output labels. A distinctive feature of reinforcement learning is its emphasis on the sequence of steps an agent must take to achieve a goal, often in complex and dynamic situations.

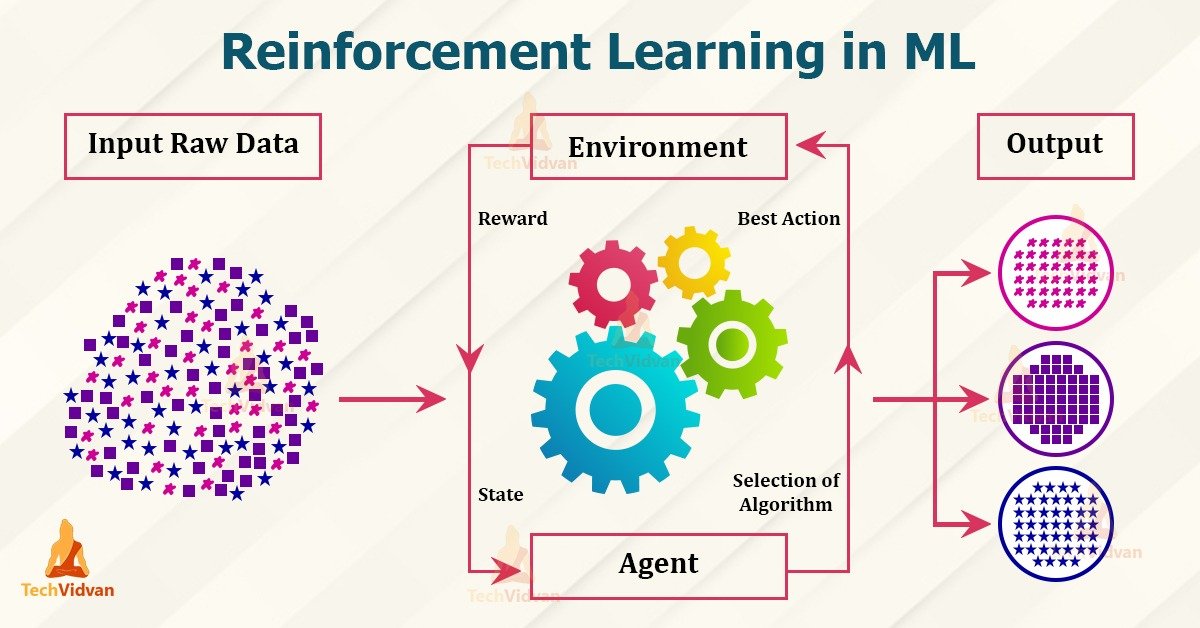

How Reinforcement Learning Works

The interaction between an agent and its environment is at the heart of reinforcement learning. This exchange involves several key elements:

Agent: The decision-maker or learner.

Environment: The external system the agent interacts with.

State: A description of the environment’s current situation.

Action: A move the agent can make; the set of all possible moves is the action space.

Reward: The environment’s feedback evaluating the action taken.

After observing the current state and choosing an action, the agent receives a reward and moves to a new state. The goal is to find a policy that maximizes the expected cumulative reward over time. This involves balancing two competing needs: exploitation (choosing actions known to yield high rewards) and exploration (trying new actions to learn their effects).
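To make this loop concrete, here is a minimal Python sketch of one episode of agent-environment interaction. The `CorridorEnv` class, its reward values, the fixed policy, and the helper names are all hypothetical and chosen only for illustration. The point is the observe, act, receive-reward, move-to-new-state cycle, and how the collected rewards are summed into a cumulative return using a discount factor `gamma` (an assumption here, though it is a common way to define cumulative reward).

```python
class CorridorEnv:
    """Hypothetical toy environment: cells 0..length-1 in a row.

    The agent starts in cell 0. Reaching the last cell ends the episode
    with reward +1.0; every other step costs -0.01.
    """

    def __init__(self, length: int = 5):
        self.length = length
        self.state = 0

    def reset(self) -> int:
        self.state = 0
        return self.state

    def step(self, action: int):
        # Action 0 moves left, action 1 moves right; the walls clamp the position.
        move = 1 if action == 1 else -1
        self.state = min(max(self.state + move, 0), self.length - 1)
        done = self.state == self.length - 1
        reward = 1.0 if done else -0.01
        return self.state, reward, done


def run_episode(env, policy, max_steps: int = 100):
    """Observe the state, act, receive a reward and a new state; repeat."""
    state = env.reset()
    rewards = []
    for _ in range(max_steps):
        action = policy(state)
        state, reward, done = env.step(action)
        rewards.append(reward)
        if done:
            break
    return rewards


def discounted_return(rewards, gamma: float = 0.99) -> float:
    """G = r_0 + gamma * r_1 + gamma**2 * r_2 + ..., accumulated backwards."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g


def always_right(state: int) -> int:
    """A fixed (hypothetical) policy that ignores the state and moves right."""
    return 1


if __name__ == "__main__":
    rewards = run_episode(CorridorEnv(length=5), always_right)
    print(rewards, discounted_return(rewards, gamma=0.9))
```

A learning agent would replace `always_right` with a policy that improves as it observes which actions lead to higher returns.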

Exploration vs. Exploitation within the Learning Process

The trade-off between exploration and exploitation is a central element of RL. Learning requires experimentation, that is, trying new things to see how they work. To maximize its rewards, however, the agent must also exploit the actions it already knows produce high rewards. Finding the right balance between the two is one of the biggest challenges in RL.
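A common and simple way to strike this balance is an epsilon-greedy rule. With a small probability `epsilon` the agent explores by picking a random action; otherwise it exploits the action with the highest estimated value. The sketch below applies the rule to a multi-armed bandit (a one-state RL problem) with Gaussian rewards. The arm means, `epsilon`, and step count are illustrative assumptions.

```python
import random
from typing import List, Sequence, Tuple


def epsilon_greedy(q_values: Sequence[float], epsilon: float, rng: random.Random) -> int:
    """Explore with probability epsilon, otherwise exploit the best estimate."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))  # explore: uniformly random action
    # Exploit: index of the highest estimated value (ties go to the lowest index).
    return max(range(len(q_values)), key=lambda a: q_values[a])


def run_bandit(true_means: Sequence[float], steps: int = 5000, epsilon: float = 0.1,
               seed: int = 0) -> Tuple[List[float], List[int]]:
    """Learn arm-value estimates by sample averaging while acting epsilon-greedily."""
    rng = random.Random(seed)
    q = [0.0] * len(true_means)   # estimated value of each arm
    counts = [0] * len(true_means)
    for _ in range(steps):
        a = epsilon_greedy(q, epsilon, rng)
        reward = rng.gauss(true_means[a], 1.0)  # hypothetical noisy reward
        counts[a] += 1
        q[a] += (reward - q[a]) / counts[a]     # incremental sample mean
    return q, counts


if __name__ == "__main__":
    q, counts = run_bandit([0.2, 0.5, 1.0])
    print([round(x, 2) for x in q], counts)
```

With `epsilon = 0` the agent never explores and can lock onto whichever arm happened to look good first. With `epsilon = 1` it never uses what it has learned.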

Value Functions and Policies

A policy maps states to actions and describes how the agent behaves at a given moment. Value functions estimate how good it is, in terms of expected cumulative reward, for the agent to be in a given state (the state value) or to take a given action in a given state (the action value). To decide the best course of action for maximizing cumulative reward, the agent consults these value functions.
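For a small problem whose dynamics are fully known, both kinds of value function can be computed directly. The sketch below runs value iteration on a hypothetical five-cell corridor in which reaching the goal cell is worth +1.0 and every other step costs -0.01. It derives the action value Q(s, a) from the state values V(s), then reads off a greedy policy by choosing the action with the highest Q in each state. The environment, rewards, and discount factor `gamma = 0.9` are illustrative assumptions. The model-free algorithms below estimate the same quantities from experience instead.

```python
LENGTH = 5                  # cells 0..4; cell 4 is the terminal goal
STATES = range(LENGTH - 1)  # non-terminal states 0..3
ACTIONS = (0, 1)            # 0 = left, 1 = right


def transition(s: int, a: int):
    """Deterministic hypothetical dynamics: returns (next_state, reward, done)."""
    nxt = min(max(s + (1 if a == 1 else -1), 0), LENGTH - 1)
    done = nxt == LENGTH - 1
    return nxt, (1.0 if done else -0.01), done


def q_value(v, s, a, gamma):
    """Action value: immediate reward plus the discounted value of the next state."""
    nxt, r, done = transition(s, a)
    return r + (0.0 if done else gamma * v[nxt])


def value_iteration(gamma: float = 0.9, tol: float = 1e-10):
    """Repeat V(s) <- max_a Q(s, a) until the values stop changing."""
    v = {s: 0.0 for s in STATES}
    while True:
        delta = 0.0
        for s in STATES:
            best = max(q_value(v, s, a, gamma) for a in ACTIONS)
            delta = max(delta, abs(best - v[s]))
            v[s] = best
        if delta < tol:
            return v


def greedy_policy(v, gamma: float = 0.9):
    """Policy: in each state, pick the action with the highest Q(s, a)."""
    return {s: max(ACTIONS, key=lambda a: q_value(v, s, a, gamma)) for s in STATES}


if __name__ == "__main__":
    v = value_iteration()
    print({s: round(x, 4) for s, x in v.items()})
    print(greedy_policy(v))
```

The resulting values fall off with distance from the goal (V(3) = 1.0, V(2) = 0.89, and so on), and the greedy policy moves right in every state.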

Algorithms: Deep Q-Networks (DQN), SARSA, Q-Learning

Numerous algorithms have been developed to solve RL problems. Q-Learning is a model-free method that updates Q-values from observed rewards in order to estimate the value of the optimal policy; because it learns about the best action regardless of which action the agent actually takes next, it is off-policy. SARSA (State-Action-Reward-State-Action), another popular approach, updates Q-values using the action the agent actually takes next, which makes it on-policy.
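The difference between the two update rules comes down to which next-step value they bootstrap from. Below is a minimal tabular sketch, assuming Q-values are stored in a dictionary keyed by `(state, action)`. The learning rate `alpha`, the discount `gamma`, and the toy states and actions are illustrative.

```python
from collections import defaultdict


def q_learning_update(q, s, a, r, s_next, done, actions, alpha=0.1, gamma=0.99):
    """Off-policy: bootstrap from the best next action, max over a' of Q(s', a')."""
    target = r if done else r + gamma * max(q[(s_next, a2)] for a2 in actions)
    q[(s, a)] += alpha * (target - q[(s, a)])


def sarsa_update(q, s, a, r, s_next, a_next, done, alpha=0.1, gamma=0.99):
    """On-policy: bootstrap from the action a' the agent actually takes next."""
    target = r if done else r + gamma * q[(s_next, a_next)]
    q[(s, a)] += alpha * (target - q[(s, a)])


if __name__ == "__main__":
    actions = ["left", "right"]
    # In state "s1", "left" looks valuable, but suppose the agent explored
    # and actually picked "right" there.
    q_ql = defaultdict(float, {("s1", "left"): 1.0})
    q_sarsa = defaultdict(float, {("s1", "left"): 1.0})
    q_learning_update(q_ql, "s0", "right", 0.0, "s1", False, actions, alpha=0.5, gamma=0.9)
    sarsa_update(q_sarsa, "s0", "right", 0.0, "s1", "right", False, alpha=0.5, gamma=0.9)
    print(q_ql[("s0", "right")], q_sarsa[("s0", "right")])
```

Q-Learning moves Q(s0, right) toward the value of the best action in s1. SARSA moves it toward the value of the exploratory action that was actually taken, so its estimates reflect the policy being followed.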

By combining deep neural networks with Q-Learning, Deep Q-Networks (DQN) allow the agent to handle high-dimensional state spaces. DeepMind famously used this method to train an agent to play Atari games, reaching superhuman performance on many of them.
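DQN adds two ingredients on top of Q-Learning. The first is an experience replay buffer, from which minibatches of past transitions are sampled at random. The second is a separate target network used to compute the regression targets. The sketch below shows both pieces without a deep learning framework. The networks are left abstract: `target_q` is a hypothetical callable standing in for the target network and returns one Q-value per action. The buffer capacity, `gamma`, and the toy transitions are assumptions.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-size store of (s, a, r, s_next, done) transitions; oldest evicted first."""

    def __init__(self, capacity: int, seed: int = 0):
        self.buffer = deque(maxlen=capacity)
        self.rng = random.Random(seed)

    def push(self, s, a, r, s_next, done):
        self.buffer.append((s, a, r, s_next, done))

    def sample(self, batch_size: int):
        # Uniform random minibatch, which breaks up correlations between
        # consecutive transitions.
        return self.rng.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


def dqn_targets(batch, target_q, gamma: float = 0.99):
    """y = r + gamma * max_a' Q_target(s', a'), or just y = r when the episode ended."""
    return [r if done else r + gamma * max(target_q(s_next))
            for (_s, _a, r, s_next, done) in batch]


if __name__ == "__main__":
    buf = ReplayBuffer(capacity=1000)
    for t in range(10):
        buf.push(t, t % 2, 1.0, t + 1, t == 9)
    batch = buf.sample(4)

    def fake_target_net(state):
        # Hypothetical stand-in for the target network's per-action outputs.
        return [0.0, 0.5]

    print(dqn_targets(batch, fake_target_net, gamma=0.9))
```

In a full DQN, these targets would be regressed against the online network's Q(s, a). The target network's weights would be refreshed from the online network every so often.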

Reinforcement Learning Types

Reinforcement can be divided into two fundamental types: positive and negative.

Positive Reinforcement

Positive reinforcement means rewarding the agent when it exhibits the desired behavior, which motivates it to repeat that behavior. The intended result is that the targeted behavior occurs more often and more strongly.

Negative Reinforcement

Negative reinforcement means removing an unpleasant stimulus when the desired behavior is exhibited, which motivates the agent to repeat that behavior. Unlike punishment, which aims to reduce undesirable behaviors, negative reinforcement strengthens the desired behavior by removing unfavorable conditions.

Reinforcement Learning Applications

Reinforcement learning has numerous applications across many different fields:

Robotics

Robotics uses reinforcement learning (RL) to teach machines to perform intricate tasks such as walking, grasping objects, and navigating their environment. By interacting with the physical world, robots learn to adapt to diverse situations and progressively improve their capabilities.

Gaming

Reinforcement learning has seen remarkable success in gaming. Notable examples include OpenAI Five defeating professional Dota 2 players and DeepMind’s AlphaGo defeating a world champion Go player. These achievements show how powerful RL can be in complex, strategic games.

Driverless Cars

Reinforcement learning plays an important role in building autonomous vehicles. By training in simulated driving scenarios, RL algorithms let self-driving cars learn safe and effective strategies, which improves their ability to handle real-world traffic.

Medical Care

Reinforcement learning (RL) is used in healthcare to refine treatment strategies, personalize medication, and improve patient outcomes. For example, by continuously learning from patient data, RL can help identify the best course of action for managing chronic diseases.

Finance

RL algorithms are used in finance to manage portfolios, build trading strategies, and optimize investment decisions. By analyzing market data and learning from past events, RL can help increase returns and reduce risk.

Challenges in Reinforcement Learning

Despite its achievements, reinforcement learning still has several drawbacks.

Sample Efficiency

RL algorithms usually need a large number of interactions with the environment to learn well, which can be impractical in real-world settings. Improving sample efficiency is an important area of research.

Scalability

The more complex the environment, the more computing power reinforcement learning requires. Creating scalable algorithms that can handle large state and action spaces is one of the main challenges in machine learning.

Trade-off between Exploration and Exploitation

In real-world settings, balancing exploration and exploitation remains a major obstacle. Excessive exploitation can produce suboptimal policies, while excessive exploration can slow learning.
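One common way to manage this trade-off in practice is to anneal the exploration rate. The agent explores heavily at the start, when it knows little, and shifts toward exploitation as its estimates improve. Below is a minimal linear schedule for an epsilon-greedy agent. The start value, floor, and decay length are illustrative assumptions, and other schedules, such as exponential decay, are equally common.

```python
def linear_epsilon(step: int, start: float = 1.0, end: float = 0.05,
                   decay_steps: int = 10_000) -> float:
    """Exploration rate that falls linearly from `start` to `end`, then stays at `end`."""
    if step >= decay_steps:
        return end
    return start + (step / decay_steps) * (end - start)


if __name__ == "__main__":
    for step in (0, 2_500, 5_000, 10_000, 50_000):
        print(step, round(linear_epsilon(step), 4))
```

Keeping a small nonzero floor (`end`) means the agent never stops exploring entirely, which helps it notice if the environment changes.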

Ethical Considerations

The use of reinforcement learning (RL) algorithms in critical applications raises ethical questions about accountability, transparency, and fairness. These issues must be addressed for RL to be used responsibly.

Future Trends in Reinforcement Learning

Several trends are shaping the development of reinforcement learning and bode well for its future:

Progress in Architectures and Algorithms

Ongoing research aims to create RL algorithms that are more reliable and efficient. Advances in neural network architectures, together with approaches such as deep reinforcement learning and meta-learning, are expected to improve the performance of RL systems.

Combining RL with Other AI Domains

Combining reinforcement learning with other branches of artificial intelligence, such as computer vision and natural language processing, is expected to open new avenues for intelligent systems capable of more sophisticated perception of, and interaction with, their environment.

Real-World Implementation Challenges

Although RL has proven highly effective in simulated environments, applying it to real-world problems raises significant barriers. Issues of robustness, safety, and interpretability must be resolved before RL can be widely adopted.

Reinforcement Learning applications in engineering

On the engineering front, Facebook has developed Horizon, an open-source reinforcement learning platform that uses reinforcement learning to optimize large-scale production systems. Facebook has used Horizon internally to:

- personalize suggestions

- deliver more meaningful notifications to users

- optimize video streaming quality.

Horizon also contains workflows for:

- simulated environments

- a distributed platform for data preprocessing

- training and exporting models in production.

A classic example of reinforcement learning in video streaming is deciding whether to serve a user a low- or high-bitrate video based on the state of the video buffers and estimates from other machine learning systems.
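As a much-simplified illustration of that example, the sketch below treats bitrate selection as a contextual bandit. The state is a coarse bucket of buffer level, the actions are "low" and "high", and the agent learns the average reward of each pair while exploring epsilon-greedily. Everything here is hypothetical: the bucket thresholds, the simulated reward that trades picture quality against the risk of a playback stall, and all constants. It is not meant to reflect how Horizon itself models the problem.

```python
import random
from collections import defaultdict

ACTIONS = ("low", "high")

# Hypothetical chance that a high-bitrate segment stalls playback, per state.
STALL_PROB = {"nearly_empty": 0.8, "medium": 0.1, "full": 0.0}


def buffer_bucket(buffer_seconds: float) -> str:
    """Discretize the buffer level into a coarse state (hypothetical thresholds)."""
    if buffer_seconds < 5:
        return "nearly_empty"
    if buffer_seconds < 15:
        return "medium"
    return "full"


def simulated_reward(state: str, action: str, rng: random.Random) -> float:
    """Hypothetical reward: high bitrate looks better (2.0 vs 1.0), but a stall
    costs a 4.0 penalty, and stalls are likely when the buffer is low."""
    if action == "low":
        return 1.0
    stalled = rng.random() < STALL_PROB[state]
    return -2.0 if stalled else 2.0  # 2.0 quality minus 4.0 penalty if stalled


def train(steps: int = 5000, epsilon: float = 0.1, seed: int = 0):
    """Learn the average reward of each (state, action) pair."""
    rng = random.Random(seed)
    value = defaultdict(float)
    count = defaultdict(int)
    for _ in range(steps):
        state = buffer_bucket(rng.uniform(0, 30))  # observe the buffer level
        if rng.random() < epsilon:
            action = rng.choice(ACTIONS)  # explore
        else:
            action = max(ACTIONS, key=lambda a: value[(state, a)])  # exploit
        r = simulated_reward(state, action, rng)
        count[(state, action)] += 1
        value[(state, action)] += (r - value[(state, action)]) / count[(state, action)]
    return value


def policy(value, buffer_seconds: float) -> str:
    """Serve the bitrate with the highest learned value for the current buffer level."""
    state = buffer_bucket(buffer_seconds)
    return max(ACTIONS, key=lambda a: value[(state, a)])


if __name__ == "__main__":
    value = train()
    for secs in (2, 10, 25):
        print(secs, policy(value, secs))
```

A production system would also model how today's choice changes tomorrow's buffer, which turns this bandit into a full sequential RL problem.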

Horizon is capable of handling production-like concerns such as:

- deploying at scale

- feature normalization

- distributed learning

- serving and handling datasets with high-dimensional data and thousands of feature types.

Reinforcement Learning in news recommendation

User preferences can change frequently, so news recommendations based on past reviews and likes can quickly become outdated. With reinforcement learning, the system can also track the reader’s return behavior.

Building such a system involves obtaining news features, reader features, context features, and reader-news features. News features include, but are not limited to, the content, headline, and publisher. Reader features describe how the reader interacts with content, e.g., clicks and shares. Context features include aspects of the news such as its timing and freshness. A reward is then defined based on these user behaviors.
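A minimal sketch of how those pieces might fit together is below. The four feature groups are concatenated into one state vector, and the reward combines immediate feedback (clicks and shares) with a bonus for the reader returning soon afterward. The `Interaction` fields, the weights, and the 24-hour horizon are hypothetical choices for illustration; the text above does not specify how the reward is weighted.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass
class Interaction:
    """Hypothetical record of how a reader responded to one recommended article."""
    clicked: bool
    shared: bool
    hours_until_return: Optional[float]  # None if the reader has not come back


def build_state(news: Sequence[float], reader: Sequence[float],
                context: Sequence[float], reader_news: Sequence[float]) -> List[float]:
    """Concatenate the four feature groups into a single state vector."""
    return [*news, *reader, *context, *reader_news]


def reward(x: Interaction, w_click: float = 1.0, w_share: float = 0.5,
           w_return: float = 0.5, horizon_hours: float = 24.0) -> float:
    """Immediate feedback plus a return bonus that shrinks the longer the reader waits."""
    r = w_click * x.clicked + w_share * x.shared
    if x.hours_until_return is not None:
        r += w_return * max(0.0, 1.0 - x.hours_until_return / horizon_hours)
    return r


if __name__ == "__main__":
    state = build_state(news=[0.2, 0.9], reader=[3.0], context=[0.5], reader_news=[1.0])
    print(len(state), reward(Interaction(clicked=True, shared=False, hours_until_return=12.0)))
```

Including the return bonus is what lets the agent favor recommendations that keep readers coming back, not just ones that earn a single click.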

Reinforcement Learning in gaming

Let’s look at an application in gaming, specifically AlphaGo Zero. Using reinforcement learning, AlphaGo Zero learned the game of Go from scratch by playing against itself. After 40 days of self-training, AlphaGo Zero outperformed the version of AlphaGo known as Master, which had defeated world number one Ke Jie. It used only the black and white stones on the board as input features, together with a single neural network. A simple tree search that relies on this single network is used to evaluate positions and sample moves, without any Monte Carlo rollouts.
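Inside that tree search, AlphaGo Zero chooses which move to explore with a PUCT-style rule. It picks the move maximizing Q(s, a) + U(s, a). Here Q is the mean value of the simulations that passed through the move, and U = c_puct * P(s, a) * sqrt(sum_b N(s, b)) / (1 + N(s, a)) favors moves with a high network prior P and few visits N. The sketch below implements just this selection step at a single node. The priors, visit counts, values, and the constant `c_puct` are illustrative inputs, not values from the paper.

```python
import math
from typing import Sequence


def puct_select(priors: Sequence[float], visits: Sequence[int],
                mean_values: Sequence[float], c_puct: float = 1.0) -> int:
    """Index of the move maximizing Q(s, a) + U(s, a) at one search node.

    priors:      P(s, a), move probabilities from the policy network
    visits:      N(s, a), how often each move has been explored so far
    mean_values: Q(s, a), average value of simulations through each move
    """
    sqrt_total = math.sqrt(sum(visits))
    best_move, best_score = -1, -math.inf
    for move, (p, n, q) in enumerate(zip(priors, visits, mean_values)):
        u = c_puct * p * sqrt_total / (1 + n)  # exploration bonus
        if q + u > best_score:
            best_move, best_score = move, q + u
    return best_move


if __name__ == "__main__":
    # Move 0 has been visited often; unvisited move 1 gets a large bonus.
    print(puct_select([0.5, 0.5], [10, 0], [0.1, 0.0]))
```

In the full algorithm, this selection is repeated from the root down to a leaf. The network evaluates the leaf instead of a random rollout, and the resulting value is backed up to update N and Q along the path.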

Reinforcement Learning in robotics manipulation

Combining deep learning and reinforcement learning can train robots to grasp a variety of objects, even ones not seen during training. This could be used, for example, to assemble products on an assembly line.

This is achieved by combining large-scale distributed optimization with a variant of deep Q-Learning called QT-Opt. Because QT-Opt supports continuous action spaces, it is well suited to robotics problems. A model is first trained offline and then deployed and fine-tuned on the real robot.
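QT-Opt does not train a separate actor network. To act in a continuous action space, it approximately maximizes the learned Q-function over actions using the cross-entropy method (CEM). The sketch below shows a generic CEM maximizer in plain Python. It samples actions from a Gaussian, keeps the best-scoring "elite" samples, refits the Gaussian to them, and repeats. The toy `q_fn`, the action dimension, and the iteration, population, and elite sizes are illustrative assumptions.

```python
import random
import statistics
from typing import Callable, List


def cem_argmax(q_fn: Callable[[List[float]], float], dim: int,
               iterations: int = 10, population: int = 64, elites: int = 6,
               seed: int = 0) -> List[float]:
    """Approximate the action that maximizes q_fn(action) over continuous actions."""
    rng = random.Random(seed)
    mean, std = [0.0] * dim, [1.0] * dim
    for _ in range(iterations):
        # Sample candidate actions from the current diagonal Gaussian.
        samples = [[rng.gauss(m, s) for m, s in zip(mean, std)]
                   for _ in range(population)]
        # Keep the highest-scoring candidates and refit the Gaussian to them.
        best = sorted(samples, key=q_fn, reverse=True)[:elites]
        mean = [statistics.fmean(col) for col in zip(*best)]
        std = [statistics.pstdev(col) + 1e-6 for col in zip(*best)]  # avoid zero std
    return mean


if __name__ == "__main__":
    # Hypothetical Q-function over a 2-D action, peaked at (0.5, -0.3).
    def toy_q(action):
        return -((action[0] - 0.5) ** 2) - (action[1] + 0.3) ** 2

    print([round(x, 3) for x in cem_argmax(toy_q, dim=2)])
```

In a QT-Opt-style system, `q_fn` would be the learned Q-network scored on the current observation and each candidate action. CEM needs only forward evaluations of that network, with no gradients with respect to the action.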

Google AI applied this approach to robotic grasping, running 7 real-world robots for 800 robot hours over a 4-month period.

Real-time bidding: Reinforcement Learning applications in marketing and advertising

A large number of advertisers is handled by clustering them and assigning each cluster a strategic bidding agent. To balance competition and cooperation among advertisers, a method called Distributed Coordinated Multi-Agent Bidding (DCMAB) is proposed.

In marketing, the ability to target individuals accurately is crucial, because the right targets lead to a high return on investment. The study in this paper was based on Taobao, the largest e-commerce platform in China. The proposed method outperforms state-of-the-art single-agent reinforcement learning approaches.

Case Studies

AlphaGo by Google DeepMind

One of the best-known uses of reinforcement learning is AlphaGo, created by Google DeepMind. By combining RL with deep neural networks, AlphaGo defeated a world champion Go player, proving that RL can handle difficult tasks.

The Dota 2 Bot by OpenAI

Another notable use of RL is the Dota 2 bot developed by OpenAI. By training in a simulated environment, the bot learned to play the game at a superhuman level and outperformed professional players, demonstrating the potential of reinforcement learning in real-time strategy games.

Healthcare and Reinforcement Learning

RL has shown promise in medicine, especially in personalized treatment. For example, by continuously learning from patient data and adjusting the course of treatment as needed, RL algorithms can optimize treatment plans for patients with chronic illnesses.

Common Questions and Answers (FAQ)

1. What distinguishes deep learning from reinforcement learning?

Deep learning trains neural networks to identify patterns in data, while reinforcement learning focuses on learning the best behaviors to maximize rewards through interaction with an environment. The two can be combined, as in deep reinforcement learning, but they address different kinds of problems.

2. What applications does reinforcement learning have in everyday technology?

Robotics, driverless cars, and recommendation systems (like Netflix and YouTube) are just a few examples of common technologies that apply reinforcement learning. It enables these systems to learn from human interactions and gradually improve their functionality.

3. What are reinforcement learning’s limitations?

Drawbacks of reinforcement learning include its need for large amounts of data, its high computational cost, and the difficulty of balancing exploration and exploitation. Furthermore, RL models may struggle to generalize to novel scenarios and can be sensitive to changes in their environment.

Key Takeaway

Reinforcement learning can effectively train AI systems to make good decisions through trial and error. It has been highly successful in applications ranging from gaming to medicine. Despite its challenges, RL research and algorithm development have great potential to transform several industries. As the field develops, real-world deployment and integration with other AI domains will be essential to realizing reinforcement learning’s full potential.