OpenAI, the company behind the popular ChatGPT artificial intelligence chatbot, announced Thursday that it would award ten equal grants from a $1 million fund for democratic experiments investigating how AI software should be governed to address bias and other concerns.

According to a blog post announcing the initiative, recipients of the $100,000 grants must present compelling frameworks for answering questions such as whether AI should criticize public figures and what it should consider the “median individual” in the world.

Critics say AI systems like ChatGPT are biased by the inputs used to shape them, and users have found examples of racist or sexist outputs. Concerns are also mounting that AI working alongside search engines such as Alphabet Inc.’s (GOOGL.O) Google and Microsoft Corp.’s (MSFT.O) Bing may provide misleading information.

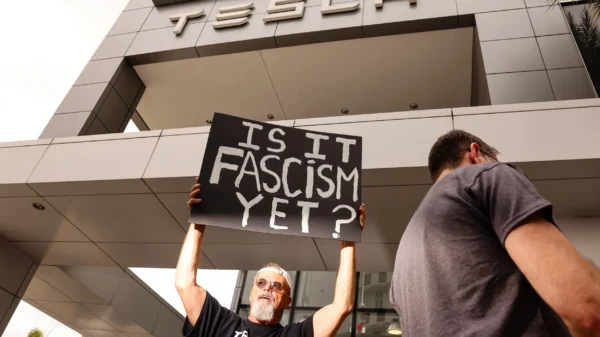

OpenAI, backed by $10 billion from Microsoft, has pushed for AI regulation even as it threatened to pull out of the European Union over proposed rules. “The current draft of the EU AI Act would be over-regulating, but we have heard it’s going to get pulled back,” OpenAI CEO Sam Altman told Reuters. “They are still talking about it.”

The $100,000 grants would not fund much AI research on their own: engineers and others in the hot sector earn between $100,000 and $300,000.

“AI systems should benefit all of humanity and be shaped to be as inclusive as possible,” OpenAI wrote in the blog post. “We are launching this grant program to take a first step in this direction.”

The San Francisco startup said the results of the funded experiments could shape its own views on AI governance, although no recommendations would be “binding.”

Altman has championed AI regulation even while rolling out updates to ChatGPT and the image generator DALL-E. “If this technology goes wrong, it can go quite wrong,” he told a Senate subcommittee this month.

Microsoft, competing with OpenAI, Google, and startups to bring AI to consumers and companies, has also embraced comprehensive AI regulation.

Nearly every sector is weighing both AI’s potential to improve productivity and cut labor costs and its propensity to spread misinformation or factual mistakes, known as “hallucinations.”

AI has already been behind several well-known hoaxes. For example, a fake image of a Pentagon explosion went viral and briefly moved the stock market. Despite repeated calls for more regulation, Congress has failed to pass meaningful new rules for Big Tech.