Overview

High-Performance Computing (HPC) is a vital component in the progress of scientific research. HPC is characterized by its capacity to process large amounts of data quickly and efficiently, allowing scientists to solve complex problems and simulate phenomena that would otherwise be impossible to study. Its importance is evident across a wide range of scientific domains, from genomics to climate modeling, underscoring its role as a foundation of contemporary scientific advancement.

The Evolution of HPC Throughout History

HPC’s Initial Developments

HPC’s journey began with the introduction of supercomputers in the middle of the 20th century. Early machines such as the IBM 7030 Stretch and the CDC 6600 offered unprecedented computational power and laid the groundwork for contemporary HPC. Despite being limited by today’s standards, they revolutionized scientific computation by enabling more intricate calculations and more detailed simulations.

What is high performance computing?

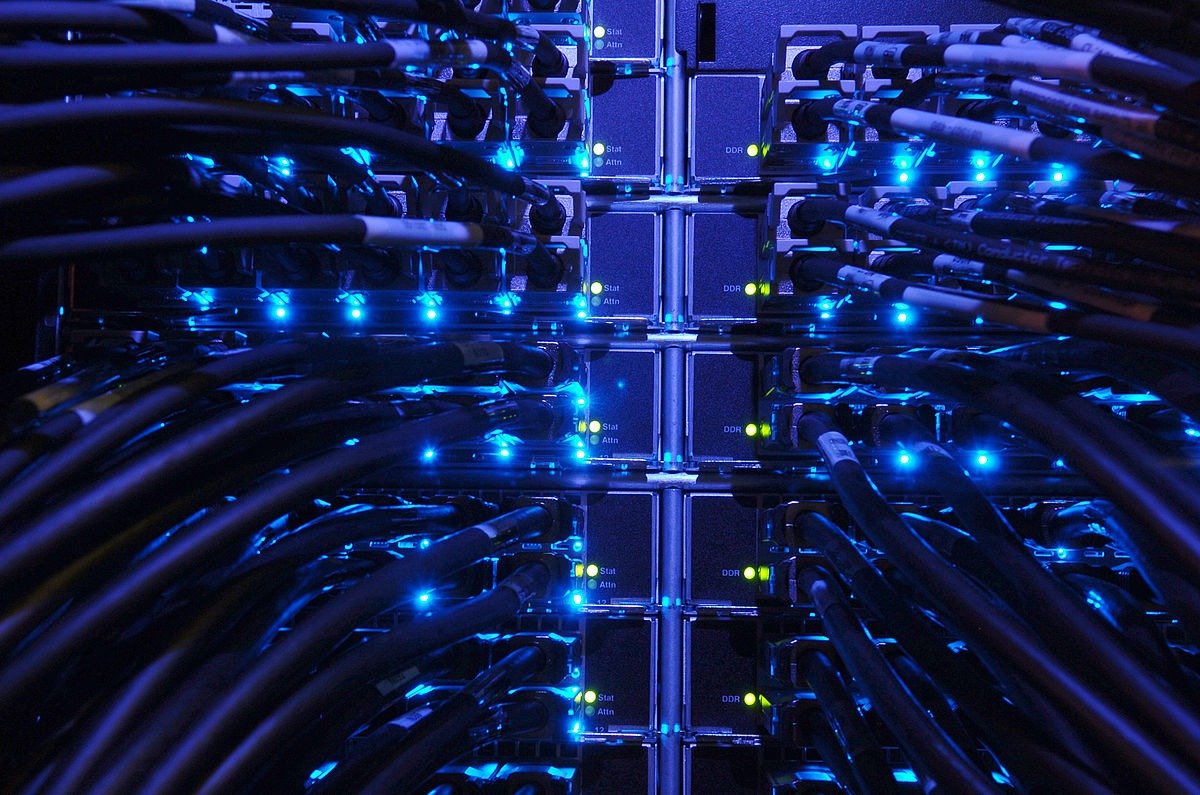

High performance computing (HPC) is the practice of aggregating computing resources to gain performance greater than that of a single workstation, server, or computer. HPC can take the form of custom-built supercomputers or groups of individual computers called clusters, and it can be run on-premises, in the cloud, or as a hybrid of both. Each computer in a cluster is often called a node, and different nodes take on different roles. Controller nodes run essential services and coordinate work between nodes; interactive (or login) nodes are the hosts that users log in to, through either a graphical user interface or the command line; and compute (or worker) nodes execute the computations. Algorithms and software run in parallel across the nodes of the cluster to complete a given task. HPC typically has three main components: compute, storage, and networking.
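The division of labor between controller and compute nodes can be illustrated with a minimal sketch. It is only an analogy, not how a real cluster is programmed: threads on a single machine stand in for compute nodes, and the function names (`split_into_chunks`, `partial_sum_of_squares`, `cluster_sum_of_squares`) are hypothetical names chosen for this example. Production HPC codes typically rely on a scheduler and a message-passing library instead.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(data, num_workers):
    """Divide data into num_workers nearly equal contiguous chunks."""
    chunk_size, remainder = divmod(len(data), num_workers)
    chunks = []
    start = 0
    for i in range(num_workers):
        # The first `remainder` chunks take one extra element each.
        end = start + chunk_size + (1 if i < remainder else 0)
        chunks.append(data[start:end])
        start = end
    return chunks


def partial_sum_of_squares(chunk):
    """Work done by one hypothetical compute node on its share of the data."""
    return sum(x * x for x in chunk)


def cluster_sum_of_squares(data, num_workers=4):
    """Controller role: scatter chunks, run the workers, gather the results."""
    chunks = split_into_chunks(data, num_workers)
    # Threads stand in for compute nodes here (an assumption of this sketch).
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        partial_results = list(pool.map(partial_sum_of_squares, chunks))
    return sum(partial_results)


if __name__ == "__main__":
    print(cluster_sum_of_squares(list(range(10)), num_workers=3))
```

The same scatter, compute, gather shape appears in many parallel programs: the input is partitioned, each worker processes its partition independently, and a coordinating step combines the partial results.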

HPC allows companies and researchers to aggregate computing resources to solve problems that are either too large for standard computers to handle individually or would take too long to process. For this reason, it is also sometimes referred to as supercomputing.

HPC is used to solve problems in academic research, science, design, simulation, and business intelligence. HPC’s ability to quickly process massive amounts of data powers some of the most fundamental aspects of today’s society, such as the capability for banks to verify fraud on millions of credit card transactions at once, for automakers to test your car’s design for crash safety, or to know what the weather is going to be like tomorrow.

Types of High Performance Computing (HPC)

High performance computing has three main components:

- Compute

- Network

- Storage

In basic terms, the nodes (compute) of the HPC system are connected to other nodes to run algorithms and software simultaneously, and are then connected (network) with data servers (storage) to capture the output. As HPC projects tend to be large and complex, the nodes of the system usually have to exchange the results of their computation with each other, which means they need fast disks, high-speed memory, and low-latency, high-bandwidth networking between the nodes and storage systems.

HPC can typically be broken down into two general design types: cluster computing and distributed computing.

Cluster computing

In cluster computing, parallel work is carried out by a collection of computers (a cluster) working together, such as a group of connected servers placed close to one another both physically and in network topology to minimize the latency between nodes.

Distributed computing

The distributed computing model connects the computing power of multiple computers in a network that is either in a single location (often on-premises) or distributed across several locations, which may include on-premises hardware and cloud resources.

In addition, HPC clusters can be classified by hardware model as homogeneous or heterogeneous. In homogeneous clusters, all machines have similar performance and configuration, and are often treated as identical and interchangeable. In heterogeneous clusters, the hardware has differing characteristics (high CPU core count, GPU acceleration, and more), and the system is best utilized when tasks are assigned to the nodes whose strengths suit them.
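To make the idea of matching tasks to node strengths concrete, here is a small sketch of a greedy placement rule. It is an illustration under simplifying assumptions, not the algorithm of any real scheduler: the `Node` class, the `"cpu"` and `"gpu"` labels, and the `assign_tasks` function are all hypothetical, and load is measured simply as the number of tasks already assigned.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    kind: str  # hypothetical capability label, e.g. "cpu" or "gpu"
    assigned: list = field(default_factory=list)


def assign_tasks(tasks, nodes):
    """Place each (task_name, preferred_kind) on the least-loaded matching node."""
    for task_name, preferred_kind in tasks:
        matching = [n for n in nodes if n.kind == preferred_kind]
        # Fall back to any node if no node of the preferred kind exists.
        candidates = matching or nodes
        target = min(candidates, key=lambda n: len(n.assigned))
        target.assigned.append(task_name)
    return {n.name: n.assigned for n in nodes}


if __name__ == "__main__":
    cluster = [Node("cpu-0", "cpu"), Node("gpu-0", "gpu")]
    work = [("train_model", "gpu"), ("parse_logs", "cpu"), ("render", "gpu")]
    print(assign_tasks(work, cluster))
```

In a homogeneous cluster the `kind` check would be unnecessary, since any node could take any task; the extra matching step is the cost of exploiting specialized hardware.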

Significant Milestones in HPC for Science

Important turning points in the development of HPC have had a major influence on scientific research. The 1980s saw the rise of parallel computing, which greatly increased computing efficiency by allowing many tasks to be processed at once. Another significant advance was the shift to petascale computing in the 2000s, which made it possible to build more precise and more detailed scientific models. These developments have been crucial in disciplines such as climate science, where greater processing power has produced more accurate climate models.

High performance computing in the cloud

Traditionally, HPC has relied on on-premises infrastructure, which requires investing in supercomputers or computer clusters. Over the last decade, cloud computing has grown in popularity in the commercial sector because it offers computing resources to organizations regardless of their capacity for upfront investment. Characteristics such as scalability and containerization have also raised interest in academia. However, cloud security concerns such as data confidentiality are still weighed when deciding between cloud and on-premises HPC resources.

Current HPC Trends

Exascale Computing

Exascale computing, capable of performing a quintillion (10^18) calculations per second, marks the forefront of HPC. Because it can simulate extremely complex systems in unprecedented detail, this leap in performance has the potential to transform scientific research. Exascale computing could, for example, make it feasible to simulate the human brain or model entire ecosystems, yielding insights that were previously out of reach.
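A quick back-of-the-envelope calculation shows what the jump from petascale (10^15 calculations per second) to exascale (10^18) means in practice. The sketch below is idealized: it assumes the machine sustains its full rate for the whole run, which real workloads rarely achieve, and the workload size of 10^21 operations is a hypothetical figure chosen only for illustration.

```python
PETASCALE_OPS_PER_SECOND = 10**15
EXASCALE_OPS_PER_SECOND = 10**18


def runtime_seconds(total_operations, ops_per_second):
    """Idealized runtime, assuming the full rate is sustained throughout."""
    return total_operations / ops_per_second


if __name__ == "__main__":
    workload = 10**21  # hypothetical number of operations in one simulation
    peta = runtime_seconds(workload, PETASCALE_OPS_PER_SECOND)
    exa = runtime_seconds(workload, EXASCALE_OPS_PER_SECOND)
    print(f"petascale: {peta / 86400:.1f} days")
    print(f"exascale:  {exa / 60:.1f} minutes")
```

Under these assumptions, a run that would occupy a petascale system for about 11.6 days would finish in roughly 17 minutes on an exascale system, a factor of 1,000.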

Quantum Computing Integration

Another potentially revolutionary trend is the combination of HPC with quantum computing. Quantum computers, which exploit the principles of quantum mechanics, can in theory solve certain kinds of problems exponentially faster than classical computers. By combining quantum computing with conventional HPC, researchers may be able to tackle problems that are currently intractable, including the simulation of quantum materials and the optimization of large-scale systems.

AI and Machine Learning in HPC

Artificial Intelligence (AI) and Machine Learning (ML) are increasingly being integrated into HPC workflows. These technologies enhance HPC capabilities by automating data analysis and optimizing computational processes. For example, AI-driven algorithms can predict weather patterns more accurately by analyzing vast amounts of meteorological data, while ML models can expedite drug discovery by predicting molecular interactions.

Energy Efficiency and Sustainability

As the demand for computational power grows, so does the need for energy-efficient HPC systems. Recent trends focus on developing sustainable HPC solutions that minimize environmental impact. Innovations such as liquid cooling systems and the utilization of renewable energy sources are becoming increasingly popular, ensuring that HPC can meet future demands without compromising sustainability.

Key Applications of HPC in Scientific Research

Climate Modeling and Weather Prediction

HPC plays a key role in climate modeling and weather prediction. High-resolution models that replicate atmospheric and oceanic phenomena demand vast computing capacity. HPC allows scientists to predict weather with greater accuracy and to model long-term climate change, contributing to the creation of policies that mitigate its effects.

Genomics and Bioinformatics

In genomics and bioinformatics, HPC is important for analyzing huge volumes of genetic data. It is essential for tasks such as genome sequencing, detecting genetic mutations, and understanding intricate biological processes. By processing and analyzing enormous datasets rapidly, researchers can make significant advances in personalized medicine and the development of novel treatments.

Materials Science

HPC accelerates materials science research by making it possible to simulate material properties at the atomic and molecular levels. This capability is required for designing novel materials with specific properties, such as enhanced conductivity or strength. Using HPC-driven simulations, researchers can investigate a wider range of materials more quickly, which speeds up the process of discovery.

Cosmology and Astrophysics

HPC greatly benefits astrophysics and cosmology by enabling the simulation of some of the most complex events in the universe. It offers the processing capacity required to investigate the universe in unprecedented detail, from simulating the formation of galaxies to understanding the behavior of black holes. These simulations provide valuable information about the universe’s beginnings and the fundamental laws of physics.

Challenges of HPC in Scientific Research

Performance and Scalability

Ensuring scalability and performance is a major difficulty in high-performance computing. HPC systems need to be able to manage increasing workloads with efficiency as computational problems get more sophisticated. To manage large-scale computations while maintaining high performance, this calls for developments in both hardware and software.
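One common way to reason about why scaling is hard is Amdahl's law, which the text above does not mention and which is introduced here only as an illustration. It estimates the best possible speedup when a fraction of a program must still run serially. The function name and parameters below are hypothetical, and the model ignores communication and I/O costs, which usually make real scaling worse.

```python
def amdahl_speedup(parallel_fraction, num_processors):
    """Ideal speedup when only part of a program can run in parallel."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if num_processors < 1:
        raise ValueError("num_processors must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    # The serial part takes the same time regardless of processor count;
    # only the parallel part is divided among the processors.
    return 1.0 / (serial_fraction + parallel_fraction / num_processors)


if __name__ == "__main__":
    for n in (1, 10, 100, 1000):
        print(n, round(amdahl_speedup(0.95, n), 2))
```

Under this model, a program that is 95% parallel can never run more than 20 times faster, no matter how many processors are added, which is why software improvements matter as much as hardware for scalability.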

Data Storage and Management

The enormous volumes of data produced by HPC workloads present difficult storage and management problems. Sound data management practices are essential to ensure that data can be accessed, processed, and stored efficiently. Innovations in data retrieval methods, storage technologies, and data compression are crucial to meeting these challenges.

Cost and Accessibility

For many researchers, access to HPC infrastructure is restricted by its high cost and by the level of skill needed to manage it. Democratizing HPC through cloud-based technologies and cooperative initiatives is essential for wider access. These strategies seek to cut costs while giving researchers the resources they need to use HPC effectively in their work.

The Future of HPC in Scientific Research

Technological Progress in Hardware

Future developments in hardware technology should improve HPC capabilities even further. Next-generation HPC systems are expected to harness semiconductor advances and neuromorphic computing, which mirrors the architecture of the human brain. These advancements will make even more complex simulations and analyses possible.

Advances in Algorithms and Software

To fully realize the potential of HPC, software and algorithms must keep advancing. New algorithms and optimizations can significantly improve computational efficiency. Further developments in programming languages and software tools will make HPC accessible to a wider group of researchers.

Multidisciplinary Partnerships

Scientific research driven by HPC increasingly relies on interdisciplinary collaboration. Working with specialists from many domains enables researchers to address intricate problems more efficiently. These partnerships encourage the sharing of concepts and methods, which produces creative solutions and fresh insights.

FAQ

Q: What is HPC, or high performance computing?

A: High-performance computing is the practice of solving complicated computational problems quickly by utilizing supercomputers and parallel processing methods.

Q: What are the benefits of HPC for scientific research?

A: HPC enables detailed simulations, complex computations, and large-scale data analysis, advancing various scientific domains.

Q: What are some of the most recent HPC trends?

A: The integration of quantum computing, exascale computing, AI and ML, and a focus on sustainability and energy efficiency are some of the current trends.

Q: What difficulties does HPC face in scientific research?

A: The challenges include scalability and performance, data storage and management, and the high cost and restricted accessibility of HPC infrastructure.

Q: What does the future hold for high-performance computing (HPC) in science?

A: Hardware technology breakthroughs, software and algorithmic developments, and more interdisciplinary cooperation are all important aspects of HPC’s future.

Key Takeaways

- High-performance computing is revolutionizing scientific research by offering the processing capacity required to solve some of its hardest problems.

- Exascale computing and AI integration are pushing boundaries, promising significant advancements in future HPC capabilities.

- Notwithstanding obstacles, HPC’s ongoing development has enormous potential to propel innovations and scientific breakthroughs in a wide range of sectors.