After hosting the first artificial intelligence (AI) safety summit, British Prime Minister Rishi Sunak hailed several landmark agreements. However, a worldwide framework for regulating the technology is still several years away.

Over two days of discussions among world leaders, business executives and researchers, tech CEOs such as Elon Musk and OpenAI's Sam Altman mingled with the likes of European Commission President Ursula von der Leyen and U.S. Vice President Kamala Harris to explore the future regulation of artificial intelligence.

Two further summits were announced, to be held in South Korea and France next year. Leaders from 28 countries, including China, signed the Bletchley Declaration, a joint statement acknowledging the risks associated with the technology. The United States and Britain also announced plans to establish A.I. safety institutes.

Despite general agreement that regulation is necessary, there is still debate over how best to govern A.I. and who should lead these efforts.

Policymakers have placed greater emphasis on the risks of rapidly evolving A.I. since Microsoft-backed (MSFT.O) OpenAI made ChatGPT publicly available last year.

Some experts have called for a pause in developing such systems, warning that the chatbot's unprecedented ability to respond to prompts with human-like fluency could lead to systems becoming autonomous and threatening humankind.

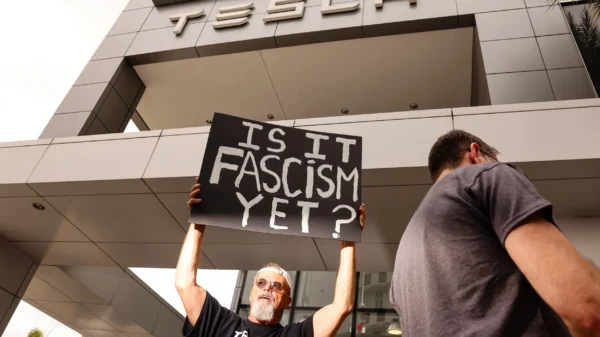

While Sunak spoke of being "privileged and excited" to welcome Tesla (TSLA.O) CEO Elon Musk, European politicians voiced concern that too much technology and data was concentrated in a small number of U.S.-based companies.

Bruno Le Maire, France's Minister of the Economy and Finance, told reporters that "having just one single country with all of the technologies, all of the private companies, all of the devices, all of the skills, will be a failure for all of us."

Having left the E.U., the U.K. has proposed a light-touch approach to A.I. regulation, in contrast to Europe's A.I. Act, which is close to being finalized and would subject developers of "high-risk" applications to stricter controls.

"I came here to sell our A.I. Act," said Vera Jourova, Vice President of the European Commission.

Jourova said that although she did not expect other nations to adopt the bloc's legal framework wholesale, some worldwide consensus on rules was necessary.

“If the democratic world will not be rule-makers, and we become rule-takers, the battle will be lost,” she stated.

Although the event presented a picture of harmony, participants noted that the three principal powers in attendance, the U.S., the E.U. and China, each attempted to assert their dominance.

Some said Harris had upstaged Sunak when the U.S. government announced its own A.I. safety institute, just as Britain had done a week earlier, and when she gave a speech in London highlighting the technology's short-term risks, in contrast to the summit's focus on existential threats.

Attendee Nigel Toon, CEO of British AI company Graphcore, remarked, “It was fascinating that just as we announced our AI safety institute, the Americans announced theirs.”

British officials hailed China's attendance at the conference and its decision to sign the Bletchley Declaration as achievements.

China's deputy minister of science and technology, Wu Zhaohui, said China was eager to collaborate with all parties on AI governance. However, he signaled a rift between China and the West by telling delegates, "Countries, regardless of their size and scale, have equal rights to develop and use AI."

According to his ministry, the Chinese deputy minister took part in the ministerial roundtable on Thursday, but he did not attend the public events on the second day.

A recurring theme of the closed-door talks, raised by several participants, was the potential danger of open-source A.I., which allows anyone to freely access and experiment with the technology's source code.

Some scientists have warned that terrorists could use open-source models to develop chemical weapons, or even to create a superintelligence beyond human control.

Speaking with Sunak at a live event in London on Thursday, Musk predicted that open-source AI would eventually approach, if not surpass, human-level intelligence. "I'm not sure how best to handle it," he said.

A.I. pioneer Yoshua Bengio, who is leading a "state of the science" report commissioned as part of the Bletchley Declaration, told Reuters that the risks of open-source A.I. were a top concern.

"It could be modified for malicious purposes, or it could fall into the hands of bad actors," he said. "These powerful systems cannot be made available to the public under an open-source license while still maintaining appropriate public safety measures."