San Francisco-based artificial intelligence startup Anthropic made waves this week with the official launch of its much-anticipated conversational chatbot, Claude. Valued at $4.1 billion following a $300 million funding round last year, Anthropic has positioned itself as a major rival to OpenAI, creator of the popular — and sometimes controversial — chatbot ChatGPT.

Claude aims to match or even surpass the capabilities of ChatGPT, while avoiding some of the issues that have plagued its rival, such as a tendency to generate false or biased information. To achieve this, Anthropic has focused on what it calls “self-aware” AI that is cognizant of when it lacks knowledge or needs to correct previous statements.

The company opened access to Claude via a waitlist on its website, allowing select users to begin conversing with the bot. So far, early reactions indicate Claude may live up to the hype and pose a legitimate alternative to ChatGPT.

“We designed Claude to be helpful, harmless, and honest using a technique called Constitutional AI,” said Dario Amodei, Anthropic’s CEO. “We believe transparent AI systems like Claude are key to solving difficult real-world problems.”

Anthropic, which was founded in 2021 by former OpenAI researchers, has kept most details about Claude under wraps until now. This stealthy approach stands in contrast to the very public beta testing of ChatGPT that began late last year. However, Anthropic believes its more cautious methodology will pay off.

“We’ve been deliberate in our approach to development and safety testing because we want Claude to be helpful to as many people as possible on day one,” said Daniela Amodei, Anthropic’s president.

Early users report that Claude gives informative, nuanced responses with fewer of the inaccuracies that sometimes crop up in ChatGPT. Its dialogue feels more natural, and it will admit gaps in its knowledge rather than trying to bluff its way through an answer.

Claude also integrates safety measures intended to avoid harmful, unethical or dangerous content. The bot will refuse inappropriate requests and can be steered back on track by reminding it of its prime directives to be helpful, harmless and honest.

Anthropic stresses that Claude will keep improving and that its behavior is guided by Constitutional AI. The technique starts from a written set of principles for ethical behavior, then uses machine learning to train the system to critique and revise its own responses against those principles, steering it toward safe, socially positive answers.

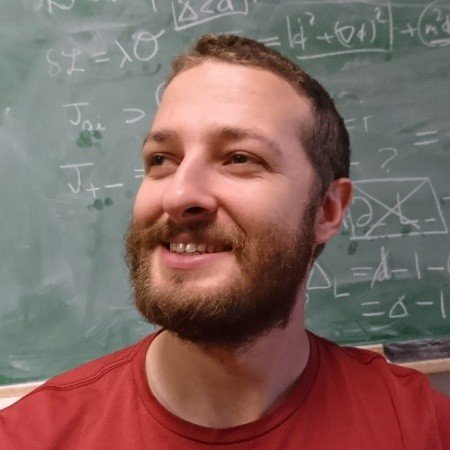

The startup boasts an impressive roster of talent and financial backing. Former OpenAI staffers Dario Amodei, Tom Brown, Chris Olah, Sam McCandlish and Jack Clark are among Anthropic’s co-founders. Prominent AI safety researchers such as Amanda Askell have also joined the company.

Photo: Dario Amodei / Hertz Foundation

Photo: Sam McCandlish / LinkedIn

Investors include Jaan Tallinn, one of Skype’s original developers, and crypto entrepreneur Sam Bankman-Fried. In addition to its massive $300 million funding round last year, Anthropic raised $124 million in its first funding round in May 2021.

Anthropic has not yet disclosed a monetization model, but it will likely charge for certain advanced features eventually. For now, the company is focused on expanding its beta user base and ensuring a safe, responsible public launch of Claude.

Photo: Sam Bankman-Fried / LinkedIn

ChatGPT’s astounding capabilities and viral popularity have sparked concerns about misuse of generative AI. Microsoft, which is investing billions in OpenAI, has unveiled plans to curb issues like AI-generated misinformation and online harassment.

Critics argue these reactive measures come too late, after ChatGPT’s release allowed harmful uses like impersonating others and producing disinformation. Anthropic hopes to preempt such issues with its safety-first approach.

“We believe AI systems as capable as Claude need to be made safe by design, not safe after the fact,” said Daniela Amodei. “We look forward to continuing our careful, comprehensive approach to make Claude as helpful and harmless as possible.”

For now, the waitlist to try Claude remains long, as Anthropic allows more users on gradually to maintain quality control. But this limited rollout marks a major milestone for the promising startup.

With its meticulous methodology and focus on safety, Anthropic aims to steer generative AI in a more cautious, ethical direction. As Claude becomes available to more users, it could pioneer a new era of responsible, transparent artificial intelligence.