Elon Musk’s X restructuring curtails disinformation research and spurs legal fears

Nearly a dozen interviews and a survey of upcoming projects reveal that social media researchers have canceled, halted, or altered more than 100 studies involving X, previously Twitter, in response to Elon Musk’s measures restricting access to the social media network.

Researchers told Reuters that Musk’s restrictions on key data collection methods on the global platform have hindered their ability to track the origin and spread of false information during real-time events like the Israeli airstrikes in Gaza and the attack on Israel by Hamas.

The most important method was a tool that gave researchers access to around 10 million tweets per month. According to an email that Reuters obtained, Twitter informed researchers in February that it would no longer offer free academic access to this application programming interface (API) as part of a redesign of the tool.

The Coalition for Independent Technology Research surveyed 167 academic and civil society researchers in September at Reuters’ request, and the results, for the first time, quantify the number of studies canceled due to Musk’s policy.

The survey also shows that most respondents fear legal action from X over their research findings or use of data. The concern stems from a lawsuit X filed in July against the Center for Countering Digital Hate (CCDH), which had published critical reports on the platform’s content moderation.

Musk did not respond to a request for comment, and a representative of X declined to comment. The company has previously stated that nearly all content views are of “healthy” posts.

Advertisers have been leaving X during Musk’s first year of ownership for fear that their ads will appear next to offensive material. Since Musk’s takeover, X’s U.S. ad revenue has fallen by at least 55% year over year in every month, according to a prior report from Reuters.

The survey showed 30 studies that were canceled, 47 that were put on hold, and 27 in which researchers switched platforms. It also showed 47 ongoing projects, although several researchers noted that their capacity to gather new data would be limited.

The affected studies include research on hate speech and on topics that have drawn regulatory attention worldwide. In one instance, a project investigating child safety on X was shelved. An Australian regulator recently penalized the platform for failing to cooperate with an investigation into its policies against child abuse.

Several respondents to the Coalition’s survey, including the researcher behind the shelved project, asked to remain anonymous. According to a survey author, researchers may want to protect ongoing studies or avoid criticism from X.

Regulators in the European Union are also looking at how X handled misinformation; this was the subject of many independent research studies that were shelved or halted, according to the survey.

According to Josephine Lukito, an assistant professor at the University of Texas at Austin, researchers’ diminished ability to study the platform means “users on (X) are vulnerable to more hate speech, more misinformation, and more disinformation.”

She contributed to the research survey for the Coalition, an international organization that strives to further the study of how technology affects society and has more than 300 members.

The survey was sent in mid-September to the Coalition’s members and to the email lists of other academic groups, including specialists in social media and political communication.

The EU is investigating X under strict new internet rules that took effect in August, which highlights the regulatory risk facing the San Francisco-based company. Penalties for each violation can reach 6% of global revenue.

According to a spokesperson for the European Commission, the Commission is monitoring compliance by X and other major platforms with their legal obligations, which include granting access to publicly available data to researchers who meet certain criteria.

UNAFFORDABLE PRICE

Before Musk’s $44 billion acquisition of Twitter, the platform was the main focus of social media research because it offered a valuable view of current affairs and political discussion, and its data was freely accessible, four researchers told Reuters.

However, Musk started cutting expenses and firing thousands of workers, including those who worked on the research tools, as soon as he entered Twitter’s headquarters.

X currently offers three paid tiers of the API, with prices ranging from $100 to $42,000 per month. The less expensive tiers provide less data than was previously available to academics for free. Almost all of the researchers whom Reuters contacted said that they were unable to pay the fees.

The decision to discontinue free academic API access, according to a former employee who wished to remain anonymous for fear of retaliation from Musk, was made due to an immediate need to concentrate on increasing revenue and reducing expenses following Musk’s acquisition.

The majority of poll participants said that they had halted or canceled their platform research due to the API changes.

Research is seriously hampered ahead of 2024, a significant election year worldwide, due to the prohibitive expense of paying to obtain less data than what was previously available, according to Lukito.

University of Notre Dame engineering professor Tim Weninger said that his team has been “flying blind” in investigating China-related information activities as they have not had access to data from the API, which is too expensive.

According to other academics who spoke with Reuters, their options for studying X are now limited to manually analyzing posts.

Other social media platforms also restrict the data that researchers can obtain. In a blog post for Tech Policy Press, Megan A. Brown, a researcher at New York University, noted that although the short-form video app TikTok released an academic research API earlier this year, its restrictive terms and conditions limit its utility for academics.

The owner of Facebook and Instagram, Meta Platforms, has collaborated on studies with outside academics; this does not replace independent research but demonstrates Meta’s openness to work with others, according to Lukito.

RESEARCH PROBLEMS

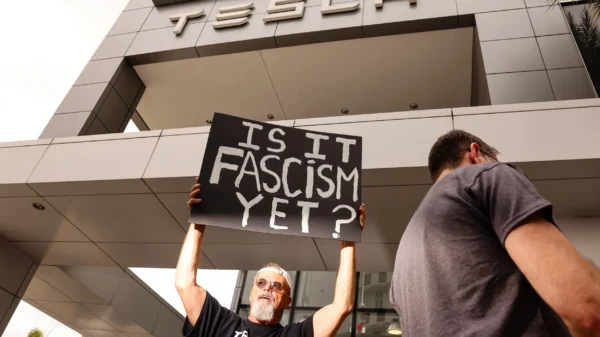

Following Musk’s acquisition, the CCDH, a group that says it combats hate speech and misinformation, released several reports claiming that the social media platform both failed to moderate offensive content and made money from it.

In July, X filed a lawsuit against CCDH, alleging that the group had improperly accessed platform data and had spread misleading claims about X’s moderation.

CCDH Chief Executive Imran Ahmed stated, “Musk wants to silence any criticism of the way he does business,” and the organization stood by its reports.

Of the 167 respondents to the Coalition for Independent Technology Research survey, 104 expressed concern about possible legal action against their projects over their findings or use of data.

In a study published last year, Bond Benton, an associate professor at Montclair State University, found that hate speech on Twitter increased in the hours following Musk’s takeover. “The move against the CCDH communicates to researchers looking at misinformation and hate speech on online platforms that there is intrinsic liability in publicly disseminating findings,” Benton said.

One researcher, who asked to remain anonymous, was studying how rape is discussed on X and told the survey they were concerned about potential legal ramifications and about the integrity of data gathered without access to the API. The study was redirected to a different social media platform, according to the researcher.

Musk and X CEO Linda Yaccarino have promoted a new policy they call “freedom of speech, not reach,” which limits the circulation of some posts but does not remove them from the site.

X says that 99% of the content users view on the site is “healthy,” a claim it made in July and attributed to estimates from Sprinklr, a software provider that helps businesses track online consumer sentiment and market trends.

When Reuters asked Sprinklr to confirm the July figures, a representative for the company, which is listed as an official partner of Twitter, stated that “any recent external reporting prepared by Twitter/X has been done without Sprinklr’s involvement.”

The representative pointed to a blog post from March that said toxic posts on X received three times as many views as non-toxic ones.