Artificial intelligence is increasingly part of everyday life, from helping with homework to reshaping workplaces and even serving as a companion in personal relationships. Despite its growing presence, there is still no comprehensive framework to ensure that AI is developed and used safely. To address this gap, two state attorneys general have announced a new initiative to establish safeguards for AI technology, with the participation of some of the largest companies in the tech sector.

North Carolina Attorney General Jeff Jackson, a Democrat, and Utah Attorney General Derek Brown, a Republican, revealed on Monday the formation of the AI Task Force. The group already counts major players like OpenAI and Microsoft among its participants, and the attorneys general anticipate additional state regulators and technology companies joining in the coming months. The task force aims to develop foundational safety measures that AI developers should implement, particularly to protect children and other vulnerable users, while also identifying new risks as the technology continues to evolve.

There is currently no overarching federal law regulating AI in the United States, which leaves much of the responsibility to the states. Some federal lawmakers have even sought to limit regulation of the technology. Earlier this year, Jackson and Brown were part of a coalition of 40 attorneys general who successfully opposed a provision in Republicans’ sweeping tax and spending cuts package that would have imposed a ten-year moratorium on states enforcing AI regulations. Federal AI legislation remains limited; one exception is the Take It Down Act, passed earlier this year, which specifically targets non-consensual deepfake pornography.

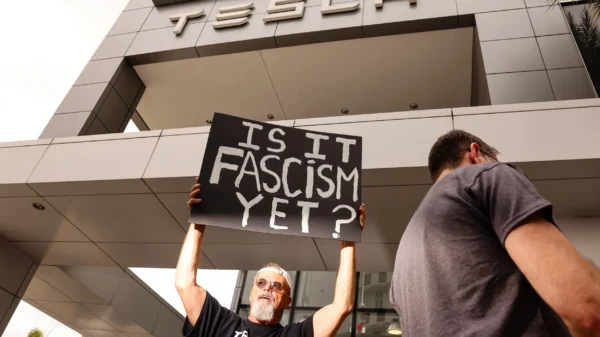

Concerns over AI safety have grown in recent months, fueled by reports linking AI use to delusions, self-harm, and exposure to inappropriate content. Companies including OpenAI and Meta, Facebook’s parent company, have struggled to balance the technology’s utility with protecting younger users. “They did nothing with respect to social media, nothing with respect for internet privacy, not even for kids, and they came very close to moving in the wrong direction on AI by handcuffing states from doing anything real,” Jackson told CNN in an exclusive interview, referring to Congress and underscoring the case for proactive measures.

Safety approaches among major AI companies are already diverging. OpenAI CEO Sam Altman recently indicated that investments in child safety protections would allow the company to give adult users more freedom, including the option of erotic conversations with AI. In contrast, Microsoft AI CEO Mustafa Suleyman stated that the company will not allow sexual or romantic conversations with its AI, even for adults. Suleyman emphasized that Microsoft aims to build an AI platform that parents can trust for their children without requiring a separate experience for young users.

By establishing the AI Task Force, Jackson and Brown hope to foster collaboration between regulators and industry leaders to mitigate risks while promoting innovation. “This effort reflects a shared commitment to harness the benefits of artificial intelligence while working collaboratively with stakeholders to understand and mitigate unintended consequences,” said Kia Floyd, Microsoft’s general manager of state government affairs. She added that the partnership would help advance both innovation and consumer protection.

While any guidelines developed by the task force would initially be voluntary, the initiative carries an important secondary benefit: it brings together state law enforcement leaders to monitor AI developments and risks collectively, making it easier for them to coordinate legal action if companies harm consumers. This involvement of state law enforcement distinguishes the task force from the think tanks and industry-led consortia that have previously issued voluntary AI safety principles.

Jackson emphasized that congressional action on AI remains critical, but said that in the absence of federal leadership, states are stepping in. “Congress has left a vacuum, and I think it makes sense for attorneys general to try to fill it,” he said.

The creation of the AI Task Force marks a new phase in AI oversight in the United States, one led by the states. It aims not only to set safety standards but also to create a forum for cooperation between states and industry, so that as AI becomes more integrated into daily life, its risks are understood, monitored, and managed. By focusing on children, vulnerable users, and the broader public, the initiative seeks to balance innovation with accountability.